Facing the Big Five G

Published by Gbaf News

Posted on September 25, 2018

10 min read. Last updated: January 21, 2026

With the recent release of the latest 3GPP 5G New Radio (NR) standards and the first true 5G networks potentially coming on stream in 2018, 5G-Ready transport networks continue to evolve into semi-unmapped territory. The forthcoming generation is not a forklift replacement so much as an extension and evolution of existing 4G mobile transport infrastructure – but “let’s wait and see” is not an option. Looking closely at their infrastructure through a 5G lens, go-ahead mobile operators see opportunities to ensure that all upgrades and extensions will be steps in the right direction – towards the 5G future.

Jon Baldry, Metro Marketing Director at Infinera, explains.

With the first commercial 5G rollouts now announced for 2018, it is highly likely that we can expect the first 5G handsets to be announced at next year’s Mobile World Congress – initially at a premium price for people who would demand the ultimate despite minimal 5G network availability.

It’s human nature – like desiring a Ferrari in a world with blanket 70mph speed limits.

It will make headlines, for sure, but the real news is not so obvious. With the transition from 2G to 3G to 4G the public has got used to the idea that a new standard means a whole new network, with providers competing to be the first to roll it out. Operators are still vying to be first to market with 5G, but this time we are not replacing the 4G network; we are extending its reach into smaller 5G cells and evolving towards new 5G standards. From the outset, 4G was not set in stone: ever since its launch there has been a continuing series of further 4G releases adding new functionality, many of them geared towards supporting new 5G networks.

Why 5G?

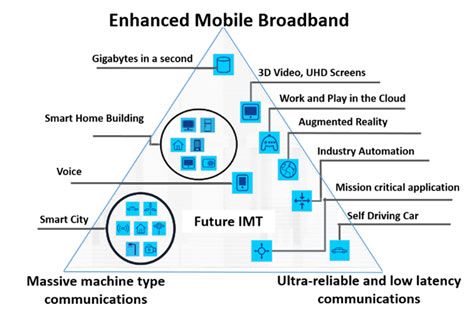

The real driver for 5G is not a single set of applications, but a broad group that are typically clustered around one of three centres of gravity:

Figure 1: The 5G Services Ecosystem source ITU-T

Enhanced broadband naturally extends 4G’s bandwidth per user, enabling 5G to compete in the residential and business broadband markets. 5G will deliver speeds at least ten times faster than 4G, enabling cloud storage of HD video clips and support for 4K video.

Massive machine type communications is geared towards the Internet of Things (IoT) with up to a million connections per square kilometre, or 100 devices in a room, where 4G now manages a few thousand per cell. Although initial IoT deployments have majored on a population of very simple devices such as traffic sensors and smart meters sending and receiving relatively tiny pulses of data, these are just the ripples preceding a potential tsunami.

Connecting surveillance cameras to the system will add a lot of traffic, but it is forthcoming ultra-reliable and low latency communications applications such as driverless cars, industrial control and telemedicine that will really pile on the pressure. These applications require secure communications that never fail, with network latency dropped by a factor of 10 from 4G standards to an impressive 1ms to give undetectable response times.

Many of the envisaged 5G services will use a blend of these capabilities. Virtual reality (VR), for example, will require both high capacity and ultra-reliability with low latency. VR is more than just a game: it has the potential to transform education, training, virtual design and healthcare. If a surgeon is to diagnose accurately, or even perform a remote operation, the resolution of the virtual reality image must be close to the resolution of a human retina. This requires at least 300 Mbps, almost 60 times higher than current HD video, with undetectable latency and of course ultra-reliability.
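The headline figures above can be cross-checked with some simple arithmetic. The short Python sketch below is illustrative only: the inputs are the numbers quoted in the text, while the derived values (the implied room size, 4G latency and HD video bitrate) are implications of those numbers rather than measured data, and the function names are invented for this example.

```python
# Sanity-check the headline 5G figures quoted in the article.
# Inputs are taken from the text; derived values are implications, not measurements.

CONNECTIONS_PER_KM2 = 1_000_000  # massive machine type communications target
DEVICES_PER_ROOM = 100           # "100 devices in a room"
LATENCY_5G_MS = 1.0              # ultra-reliable, low latency target
LATENCY_IMPROVEMENT = 10         # "dropped by a factor of 10 from 4G standards"
VR_BITRATE_MBPS = 300            # retina-resolution virtual reality
VR_VS_HD_RATIO = 60              # "almost 60 times higher than current HD video"

SQUARE_METRES_PER_KM2 = 1_000 * 1_000


def connections_per_m2(per_km2: float) -> float:
    """Convert a connection density per square kilometre to per square metre."""
    return per_km2 / SQUARE_METRES_PER_KM2


def implied_room_area_m2(devices: int, per_km2: float) -> float:
    """Floor area that would hold `devices` connections at the given density."""
    return devices / connections_per_m2(per_km2)


def implied_4g_latency_ms(latency_5g_ms: float, improvement: float) -> float:
    """4G latency implied by the quoted 5G target and improvement factor."""
    return latency_5g_ms * improvement


def implied_hd_bitrate_mbps(vr_mbps: float, ratio: float) -> float:
    """HD video bitrate implied by the quoted VR bitrate and ratio."""
    return vr_mbps / ratio


if __name__ == "__main__":
    print("Connections per square metre:", connections_per_m2(CONNECTIONS_PER_KM2))
    print("Implied room area (m^2):",
          implied_room_area_m2(DEVICES_PER_ROOM, CONNECTIONS_PER_KM2))
    print("Implied 4G latency (ms):",
          implied_4g_latency_ms(LATENCY_5G_MS, LATENCY_IMPROVEMENT))
    print("Implied HD bitrate (Mbps):",
          implied_hd_bitrate_mbps(VR_BITRATE_MBPS, VR_VS_HD_RATIO))
```

Because the text says "almost 60 times", the implied HD bitrate should be read as a rough lower bound rather than an exact value.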

These are the sort of facts, figures and exciting applications that make the headlines. The real work, however, is to provide a whole network that can support such service levels. What does this mean for the mobile provider who already has a huge investment in 4G infrastructure?

How to get there

5G achieves its massive bandwidth by operating on higher frequency bands, in the millimetre wave spectrum. At these frequencies the signals do not travel as far and they are more readily obstructed by walls, obstacles, rain or mist, requiring clear line-of-sight access.

A slow download to a smartphone, or a break in a phone conversation, is annoying but seldom disastrous – and earlier technologies were no better. Extreme reliability is, however, essential for driverless cars or other critical 5G services. We cannot afford any blind spots, where a building shadows the signal.

Full availability means that many more smaller cells must be added to the network. Existing 4G access must be extended like capillaries in a fine network of small cells feeding back to existing transport arteries. This requires a huge investment, partly compensated by the fact that 5G antennae can be much smaller and use less power. They will also conserve power by focusing signals more accurately rather than beaming equally in all directions at once.

To support this more dynamic cell behaviour, we need greater intelligence towards the edge. As well as using multiple antennae to aim signals more efficiently, 5G will also recognise the type of signals being sent and reduce power when less is needed. Having a host of small cells in close proximity also enables Coordinated Multi-Point (CoMP) – a technique whereby nearby base stations respond simultaneously and cooperate to improve quality of service.

While the new radio access network does its best to minimise latency, it comes to nothing if some signals have to travel all the way to and from a distant data centre. So another trend will be for Mobile Edge Computing (MEC) – where caching, compute power and critical applications will be pushed closer to the network edge to reduce latency and congestion in the transport network and optimize quality of service.
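To see why distance to the data centre matters, consider propagation delay alone. The sketch below is a rough illustration, not an engineering model: it assumes light travels through optical fibre at roughly 5 microseconds per kilometre (a common rule of thumb, not a figure from this article) and ignores radio, switching, queuing and processing delays, all of which consume part of the budget in practice. The function names and sample distances are hypothetical.

```python
# Rough propagation-delay estimate for traffic hauled to a distant data centre.
# ASSUMPTION: ~5 microseconds per km one way in optical fibre (rule of thumb).
# Radio, switching, queuing and compute delays are deliberately ignored.

FIBRE_DELAY_US_PER_KM = 5.0
LATENCY_TARGET_MS = 1.0  # the 5G low latency target quoted earlier in the article


def round_trip_ms(distance_km: float) -> float:
    """Round-trip fibre propagation delay in milliseconds."""
    return 2 * distance_km * FIBRE_DELAY_US_PER_KM / 1000.0


def max_distance_km(budget_ms: float) -> float:
    """Furthest one-way fibre distance whose round trip fits the budget."""
    return budget_ms * 1000.0 / (2 * FIBRE_DELAY_US_PER_KM)


if __name__ == "__main__":
    # Hypothetical distances chosen only to illustrate the trend.
    for km in (10, 100, 500):
        print(f"{km} km away: {round_trip_ms(km):.2f} ms round trip")
    print("Upper bound on distance for the target (km):",
          max_distance_km(LATENCY_TARGET_MS))
```

Under these assumptions, propagation alone uses up the whole 1 ms budget at about 100 km, so once real-world processing delays are included the compute has to sit considerably closer to the cell, which is the case for MEC.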

Fibre-deep challenges

Existing cellular networks rely heavily on fibre optic links to connect cell towers to the core network. Although high speed wireless can bridge the gap when time or cost makes it impossible to lay fibre, the only technology to consistently support 5G’s surge in demand and quality of service will be fibre. Each cell of a capillary 5G network is far smaller than a typical 4G cell, but there are so many of them, and the applications are so demanding, that the total bandwidth demand in the transport network will be massive.

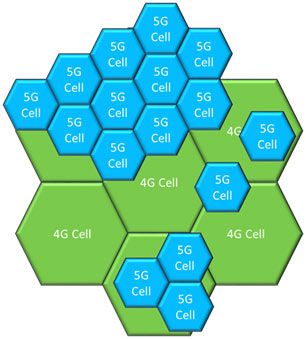

Figure 2: 4G and 5G Cell Coverage

So it is necessary to extend fibre as close as possible to the small cells in order to meet this demand. This “fibre-deep” evolution will not be achieved by simply multiplying existing fibre equipment and building it out into the metro space as needed – that would be a colossally expensive operation both in terms of real estate and equipment costs. Instead there will be a need to install many more compact and power efficient network nodes, wherever they can be economically accommodated. This could include remote telecom huts, street cabinets, cupboards or cell sites – locations quite unsuitable for housing racks of equipment that is optimized for a controlled telco environment.

Selecting suitable equipment will no longer be a simple matter of asking a preferred supplier to meet the required performance levels. It will be necessary to look much more closely at the specifications to see if devices are sufficiently rugged, compact and power efficient to survive where space and power supply are limited, and temperature and humidity levels more extreme. With a massive increase in the number of fibre installations, commissioning and operating expenses will also soar unless extra care is taken to choose the most compact, reliable and easy to maintain optical equipment.

Leading optical equipment suppliers are well aware of these challenges and are developing solutions more suitable for fibre-deep networking. The latest access optimised units can deliver 100Gbps at a mere 20 watts, packing over 400Gbps into one standard rack unit – about eight times the density of previous generation equipment.
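The efficiency figures quoted above can be turned into per-gigabit and per-rack-unit numbers. The following Python sketch is a back-of-the-envelope illustration: the inputs come from the text, the function names are invented for this example, and the per-rack-unit power estimate assumes that power scales linearly with capacity, which the article does not state.

```python
# Back-of-the-envelope view of the quoted access-optimised equipment figures.
# Inputs are from the article; linear power scaling is an ASSUMPTION.

UNIT_CAPACITY_GBPS = 100   # "100Gbps at a mere 20 watts"
UNIT_POWER_WATTS = 20
RACK_UNIT_GBPS = 400       # "over 400Gbps into one standard rack unit" (lower bound)
DENSITY_GAIN = 8           # "about eight times the density of previous generation"


def watts_per_gbps(power_watts: float, capacity_gbps: float) -> float:
    """Power needed per gigabit per second of capacity."""
    return power_watts / capacity_gbps


def estimated_rack_unit_watts(rack_gbps: float) -> float:
    """Power for one rack unit, assuming power scales linearly with capacity."""
    return rack_gbps * UNIT_POWER_WATTS / UNIT_CAPACITY_GBPS


def implied_previous_density_gbps(rack_gbps: float, gain: float) -> float:
    """Previous-generation capacity per rack unit implied by the density gain."""
    return rack_gbps / gain


if __name__ == "__main__":
    print("Watts per Gbps:", watts_per_gbps(UNIT_POWER_WATTS, UNIT_CAPACITY_GBPS))
    print("Estimated watts per rack unit:",
          estimated_rack_unit_watts(RACK_UNIT_GBPS))
    print("Implied previous-generation Gbps per rack unit:",
          implied_previous_density_gbps(RACK_UNIT_GBPS, DENSITY_GAIN))
```

Figures of this kind are what a buyer would compare against the power and space actually available in a street cabinet or cell site.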

What’s more, the industry has been working to bring the International Telecommunication Union’s (ITU) vision of autotuneable WDM-PON optics up to the performance levels required to support the reach and capacity requirements of 5G networks. This eases the pressures of commissioning and maintaining extensive DWDM optical networks by removing the technicians’ burden of determining and adjusting wavelengths at every installation. Autotuneable technology automatically selects the correct wavelength without any configuration by the remote field engineer, enabling them to treat DWDM installations with the same simplicity as grey optics.

Pressure on the transport network

This far denser 5G access environment, even with greater intelligence located towards the edge, will put heavy pressure on the upstream infrastructure. In between times of change, buying patterns tend to stabilise towards the convenience of familiar, single vendor provision. With the shift to 5G we are already seeing greater competitive pressure between mobile operators, and between wholesale operators hosting 5G transport services. This is forcing buyers to demand higher performance, greater efficiency and more demanding specifications – driving a shift towards more aggregated best-of-breed solutions.

Higher performance is not all that is needed: there are other significant changes taking place as 4G networks evolve towards 5G. Datacenter technology, such as spine-leaf switching and network slicing, will increasingly migrate to the transport network to provide the flexibility to support more distributed intelligence and the need for MEC. Where 4G started with high performance dumb pipes connecting cell towers to the core, we are now evolving towards a more flexible software-defined transport architecture.

As well as greater capacity, there are other demands that will not be met by many existing optical solutions. Among the refinements required for 5G, Carrier Aggregation enables the use of several different carriers in the same frequency bands to increase data throughput, rather as CoMP (described above) makes use of neighbouring cells. These solutions require new levels of synchronization precision, as well as low latency. Mobile operators now buying equipment need to look closely at the specifications to ensure that they are not investing in systems that will become obsolete as 5G rolls out. There are already some nominally 4G mobile transport networks that meet the demanding 5G synchronization and latency specifications.

Conclusion

5G-readiness is an ongoing development, and we can expect more early announcements of 5G services on the basis that they meet 5G speeds or other criteria, without providing the full 5G mobile service. Like owning a Ferrari, it’s a combination of marketing hype and status. Providers and nations are understandably keen to demonstrate 5G way ahead of the timescales favoured by the 3GPP standards body.

Major sporting events, with their massive global TV coverage, offer a stunning opportunity for operators to showcase their 5G capabilities. The 2012 London Olympics were the first “smartphone Olympics”, where spectators could simultaneously view the games close-up on their handsets. The 2020 Summer Olympics in Tokyo and the 2022 Winter Olympics in Beijing will vie with each other to highlight the way these nations are driving mobile 5G, as Europe once drove 3G and North America drove 4G. Europe and North America are also looking to showcase 5G, as shown by Elisa’s recent announcement of what is claimed to be the world’s first commercial 5G service in Finland. By 2022 we can expect a significant number of Beijing Winter Olympics spectators to be using 5G virtual reality devices to spectacular effect.

Meanwhile, mobile operators need to work steadily towards these capabilities with 5G-Ready mobile transport that can optimise 4G networks today and provide the high performance required for full 5G in the future. Operators can avoid investing in soon-to-be obsolete mobile transport technology by seeking advice from experts at the leading edge of optical network equipment and design.
