Romance Scams: A Growing Threat and How to Fight Them

Published by Jessica Weisman-Pitts

Posted on February 13, 2025

7 min read. Last updated: February 14, 2025

By Anurag Mohapatra, SME and Sr. Product Manager, NICE Actimize

In an age where digital connections flourish, love has become a double-edged sword. Romance scams are soaring: the UK Finance 2024 report shows a 17% increase in financial losses from these scams and a 14% rise in reported cases, while the FBI's IC3 2023 report counts 17,823 victims in the US, who collectively lost $652 million.

Yet, these figures only scratch the surface, as an estimated 70% of victims remain silent, their stories buried beneath shame and heartbreak. Behind every statistic lies a real person—someone who believed in love, only to find themselves ensnared in a web of deceit that resulted in major financial loss. As banking institutions and regulators scramble to implement reimbursement laws, the battle against these emotional and financial predators demands a united front. With social media as the breeding ground for such fraud, tech companies and lawmakers must join forces to close the gaping loopholes that allow these scams to thrive, transforming the digital landscape into a safer haven for genuine connections.

Romance scams are no longer the work of lone individuals like the infamous "Tinder Swindler." Today these scams are perpetrated by organized criminal groups. The United Nations Office on Drugs and Crime (UNODC) reports that these groups treat scams like a business, complete with departments for generating fake identities, grooming and scamming victims, and laundering money. These networks stretch across Southeast Asia, West Africa, and Eastern Europe. In Nigeria and Ghana, "Yahoo Boys" have shifted from the old "419" email schemes to romance fraud, chasing higher payouts.

Technology: A New Weapon for Scammers

Technology is giving scammers a dangerous edge. Deepfakes and AI tools make their lies more convincing than ever. AI-generated images can't be traced through simple reverse-image searches, and scammers can now appear as entirely different people on live video calls. Voice cloning tools make phone calls seem more legitimate, blurring the line between reality and deception.

Frank McKenna, reporting for Frank on Fraud, unveiled the sophisticated AI tactics employed by "Yahoo Boys." Scammers leverage the latest advancements in AI, including deepfakes, both to generate fake identity documents and to create fake images and videos for scamming victims on social media. The rapid advancement and accessibility of AI have led to wide-scale use of these tools by criminal groups. New research from Stanford University and Google DeepMind shows that AI can now clone entire personalities in as little as two hours.

AI systems analyze a person's speech patterns, word choices, and emotional expressions to replicate their voice, unique mannerisms, and communication style. Criminal groups can use such technology to create digital personas that would be indistinguishable from real people. These developments underscore the urgent need for regulation around AI's ethical use and for updated fraud detection systems.

Social Media's Role in Facilitating Scams

Social media and dating apps are fertile hunting grounds for scammers because creating fake profiles is ridiculously easy. Tightening user verification might slow down scam activity, but it could also reduce user numbers—a tough trade-off for platforms focused on growth.

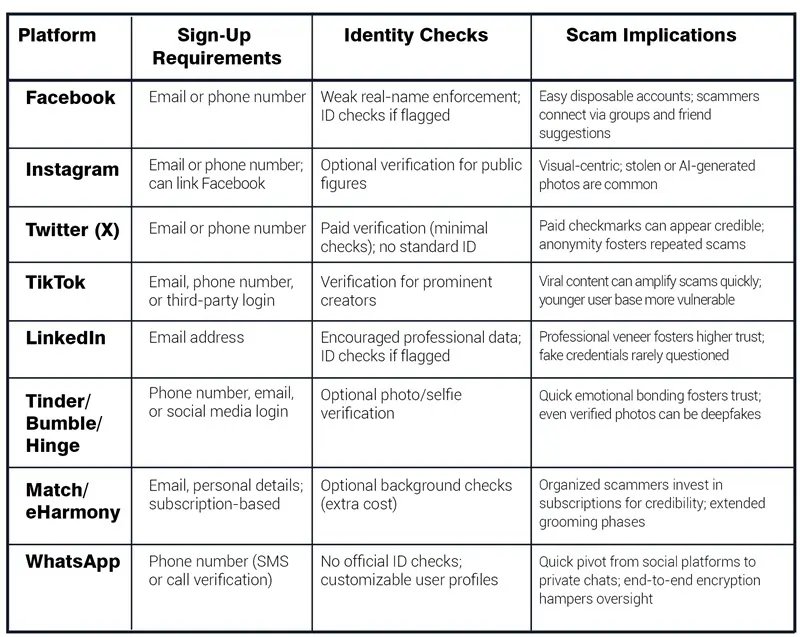

A research study conducted by TSB Bank revealed that a staggering 80% of all social media fraud cases were linked to Meta-owned platforms such as Facebook, Instagram, and WhatsApp. Victims often encounter scammers through fake profiles, with poor moderation allowing fraudulent accounts to thrive. The data underscores how inadequate oversight and reactive moderation contribute to the proliferation of romance scams.

The accompanying table illustrates the different sign-up requirements and identity checks employed by some of the most popular social media and dating platforms. A phone number or email is often all it takes to create multiple accounts. Platforms like Facebook and Instagram don't strictly enforce their real-name policies, allowing scammers to blend in. Most platforms act only after a scam has been reported, and by then the damage is done.

Even when fake profiles are reported, social media platforms often take minimal to no action to remove them. Meta, for its part, has taken some action against organized crime groups: as reported by Axios, it blocked over 2 million accounts linked to scam compounds in Myanmar, Laos, Cambodia, the UAE, and the Philippines. However, experts argue that these measures are insufficient given the scale of the problem.

The Legal Shield: Why Section 230 Needs Revising

Section 230 (47 U.S.C. § 230) is a law enacted in 1996 to foster innovation by protecting internet companies from liability for user-generated content. It encouraged "Good Samaritan" moderation by letting platforms moderate content without incurring publisher-level liability. Although written in a spirit of innovation, the law hasn't kept up with technology and how the internet has evolved.

Platforms now host billions of users and manage sophisticated content algorithms, but thanks to Section 230, they still aren't legally responsible when scammers use their services. This legal shield means platforms have little incentive to invest heavily in fraud detection.

In 2020, the US Department of Justice reviewed Section 230, proposing reforms to hold platforms accountable when they knowingly allow scams, establish clear standards for good-faith moderation, and introduce a duty of care requiring platforms to implement basic fraud detection tools.

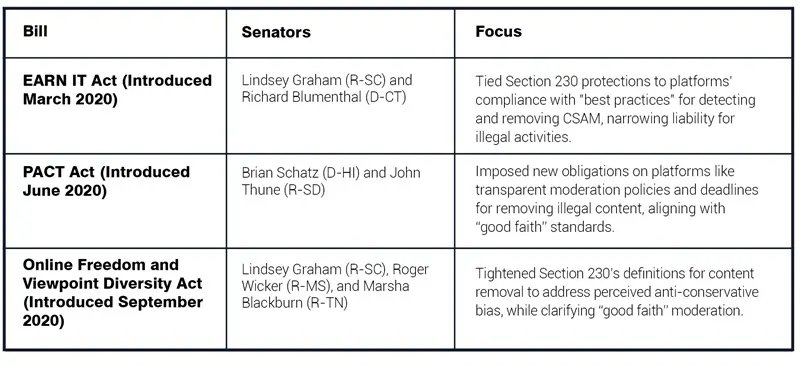

Several bills have been introduced by a number of senators, as summarized in the accompanying table, all seeking to narrow the broad immunity currently protecting platforms from liability.

If passed, such legislation would hold platforms accountable and require them to shut down accounts that are engaged in scam activity.

The Financial Sector's Role in Combatting Romance Scams

In the U.S., transparency around scam reimbursements is limited because actual reimbursement data isn't publicly available. In contrast, the U.K. provides better reporting on reimbursement practices, with 66% of all losses related to authorized push payment (APP) fraud reimbursed in 2023. This transparency stems from the Contingent Reimbursement Model, a voluntary code signed by banks before the U.K. Payment Systems Regulator (PSR) reimbursement requirements took effect in October 2024.

While reimbursements are crucial, banks must prioritize proactive measures to prevent scam transactions. Leveraging advanced technologies like machine learning, behavioral analytics, and real-time transaction monitoring can help identify and block scams as they occur.

Given the irrevocable nature of many faster payment systems and high transaction limits, real-time monitoring is critical. Fraud detection systems that utilize machine learning models can flag risky transactions, but building mechanisms and processes to intercept these transactions is equally essential. Scammers often create a false sense of urgency, leading victims to initiate and authorize transactions that result in financial loss.

Automated methods, such as push notifications through banking apps or text messages, can alert customers to potential risks. For example, a pop-up message could inform users that the intended beneficiary has been linked to three recent scam claims under investigation. Such transparency, when combined with empathetic human agents, can significantly reduce the number of romance scam transactions.
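To make the pop-up idea concrete, here is a minimal Python sketch of how a payment flow might look up a beneficiary on a risk list and build a warning before the customer confirms a transfer. Everything in it is an illustrative assumption rather than a real banking API: the `OPEN_SCAM_CLAIMS` data, the account identifiers, the `WARNING_THRESHOLD` value, and the `scam_warning` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical risk list: beneficiary account ID -> open scam claims under investigation.
OPEN_SCAM_CLAIMS = {"GB00-EXAMPLE-0001": 3, "GB00-EXAMPLE-0002": 0}

# Assumed policy: warn as soon as a beneficiary has at least one open claim.
WARNING_THRESHOLD = 1


@dataclass
class Payment:
    beneficiary_id: str
    amount: float


def scam_warning(
    payment: Payment, claims: Mapping[str, int] = OPEN_SCAM_CLAIMS
) -> Optional[str]:
    """Return a customer-facing warning if the beneficiary has open scam claims, else None."""
    count = claims.get(payment.beneficiary_id, 0)  # unknown beneficiaries count as zero
    if count < WARNING_THRESHOLD:
        return None
    noun = "claim" if count == 1 else "claims"
    return (
        f"Warning: this beneficiary has been linked to {count} recent scam {noun} "
        "under investigation. Please pause and confirm you know and trust the recipient."
    )


if __name__ == "__main__":
    print(scam_warning(Payment("GB00-EXAMPLE-0001", 500.0)))
```

In practice the lookup would sit alongside a model-based risk score and a route to a human agent; the sketch only shows the notification step.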

Data sharing is vital for building a collective defense against fraud. Sharing scam patterns and risk lists enables financial institutions to identify high-risk beneficiaries suspected of fraud. The Federal Reserve's Scam Information Sharing Industry Work Group has recommended enhancing this collaborative approach, emphasizing real-time information sharing and standardized data formats.

What Can Be Done to Stop Romance Scams?

Addressing romance scams requires coordinated efforts across various sectors, including social media platforms, regulatory frameworks, and financial institutions. Social media platforms need to strengthen their user verification processes and enforce stricter moderation policies. Additionally, these platforms should publish regular transparency reports that detail scam-related activities.

There is also a need to amend Section 230 to narrow the broad immunity that currently protects platforms from liability when scammers exploit their services. Recommendations from the Department of Justice for clearer "good faith" moderation standards and a defined duty of care are essential in this regard.

Banks play a crucial role by enhancing real-time fraud detection through machine learning and behavioral analytics. They should also implement robust interdiction mechanisms to prevent fraudulent transactions from being completed.

Furthermore, promoting cross-industry data sharing is vital for identifying high-risk accounts and beneficiaries. Collaboration between banks, social media platforms, and law enforcement is key to effectively curbing these scams. By taking these steps, we can strengthen defenses against romance scams, reduce financial losses, and better protect vulnerable individuals.
